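Batch Normalization was proposed by Ioffe and Szegedy in 2015, and it inspired several normalization techniques used in state-of-the-art models today (layer norm, weight norm, etc.). Batch normalization normalizes the output of each layer using the mean and variance of the examples in the current batch. Formally, if $x_1, \dots, x_m$ are the values of one activation over a mini-batch of $m$ examples, batch norm computes

$$
\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad
\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} (x_i - \mu_B)^2, \qquad
\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad
y_i = \gamma\,\hat{x}_i + \beta
$$

where $\epsilon$ is a small constant for numerical stability, and $\gamma$ and $\beta$ are a learned scale and shift.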
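The forward computation can be sketched in a few lines of plain Python. This assumes a single feature, so each example contributes one scalar. The function name `batchnorm_forward` and the toy batch are illustrative only and are not taken from the paper or any library.

```python
import math

def batchnorm_forward(xs, gamma, beta, eps=1e-5):
    """Batch-normalize a single feature across a mini-batch.

    xs holds one scalar activation per example. Returns the outputs y_i and the
    intermediate values (mean, variance, normalized activations) for inspection.
    """
    m = len(xs)
    mu = sum(xs) / m                              # mu_B: batch mean
    var = sum((x - mu) ** 2 for x in xs) / m      # sigma_B^2: biased batch variance
    x_hat = [(x - mu) / math.sqrt(var + eps) for x in xs]  # normalize
    ys = [gamma * xh + beta for xh in x_hat]      # learned scale and shift
    return ys, mu, var, x_hat

# A hypothetical batch of three examples, the same size as the backprop graph.
ys, mu, var, x_hat = batchnorm_forward([1.0, 2.0, 6.0], gamma=2.0, beta=0.5)
print(mu, var)
print(ys)
```

Whatever the scale of the inputs, the outputs have mean $\beta$ and variance close to $\gamma^2$. The variance is slightly below $\gamma^2$ because of $\epsilon$.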

Why?

During training, the partial derivative of a parameter obtained via backprop gives the gradient under the assumption that all other parameters stay constant. This assumption does not hold, because all parameters are updated simultaneously. As a result, the distribution of inputs to a layer in a deep neural network can change significantly from one update to the next. This is called Internal Covariate Shift.
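The following toy example illustrates the effect. Its setup is entirely hypothetical:

- a two-layer scalar linear model;
- four made-up inputs;
- a target of $t = 3x$;
- plain gradient descent.

Both weights are updated at once, from gradients that each assume the other weight is fixed. After a single step, the inputs to the second layer have shifted noticeably.

```python
import statistics

# Hypothetical toy setup: a two-layer *scalar* linear model h = w1 * x, y = w2 * h,
# trained with mean squared error on an assumed target function t = 3x.
xs = [0.5, 1.0, 1.5, 2.0]
targets = [3.0 * x for x in xs]
w1, w2, lr = 0.5, 0.5, 0.1

def layer2_inputs(w1):
    """The values h = w1 * x that the second layer receives for the whole dataset."""
    return [w1 * x for x in xs]

def grads(w1, w2):
    """Partial derivatives of the MSE loss; each treats the other weight as fixed."""
    n = len(xs)
    g1 = g2 = 0.0
    for x, t in zip(xs, targets):
        h = w1 * x
        err = w2 * h - t              # prediction error; dL/dy = 2 * err / n
        g1 += 2 * err * w2 * x / n    # dL/dw1, holding w2 fixed
        g2 += 2 * err * h / n         # dL/dw2, holding w1 (and hence h) fixed
    return g1, g2

before = layer2_inputs(w1)
g1, g2 = grads(w1, w2)
w1, w2 = w1 - lr * g1, w2 - lr * g2   # both weights are updated simultaneously
after = layer2_inputs(w1)

# g2 was computed for the old inputs `before`, but layer 2 now sees `after`.
print("layer-2 input mean, stdev before:", statistics.mean(before), statistics.pstdev(before))
print("layer-2 input mean, stdev after: ", statistics.mean(after), statistics.pstdev(after))
```

The gradient for `w2` was computed for the layer-2 inputs in `before`. After the joint update, the second layer actually receives `after`, which has roughly twice the mean.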

Mathematically, if we have a simple linear model

Batch norm aims to reduce internal covariate shift by normalizing each layer's activations. This centers them and reduces the variance caused by noisy parameter updates, without taking any information away: the model is still free to learn whatever mean and variance it prefers by updating $\gamma$ and $\beta$.
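To see that no information is lost, choose $\gamma$ equal to the batch standard deviation (with $\epsilon$ included) and $\beta$ equal to the batch mean. This exactly undoes the normalization, a point Ioffe and Szegedy also make. The check below is a minimal pure-Python sketch, assuming a single feature and a hypothetical three-example batch.

```python
import math

def batchnorm(xs, gamma, beta, eps=1e-5):
    """Batch-normalize one feature across the batch, then scale by gamma and shift by beta."""
    m = len(xs)
    mu = sum(xs) / m
    var = sum((x - mu) ** 2 for x in xs) / m
    return [gamma * (x - mu) / math.sqrt(var + eps) + beta for x in xs]

xs = [1.0, 2.0, 6.0]
eps = 1e-5
mu = sum(xs) / len(xs)
var = sum((x - mu) ** 2 for x in xs) / len(xs)

# gamma = sqrt(var + eps) and beta = mu invert the normalization step,
# so the layer can still represent the identity if training favors it.
recovered = batchnorm(xs, gamma=math.sqrt(var + eps), beta=mu, eps=eps)
print(recovered)  # equal to xs up to floating-point rounding
```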

Backprop

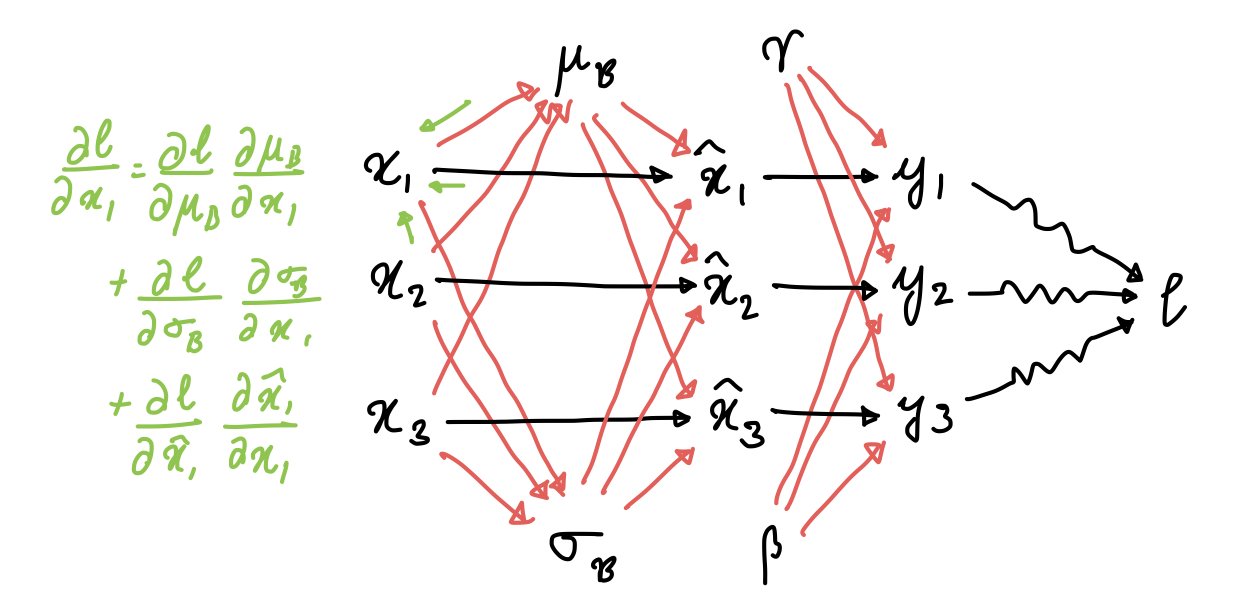

Before doing the backprop by hand, it really helps to draw a computation graph.

This small computation graph uses just three examples, but it is enough to show which variables depend on each other, and how. From it, we can obtain all the partial derivatives via the chain rule:
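For reference, here are the first three gradients as they appear in Ioffe and Szegedy's paper. The notation is:

- $\ell$ is the loss;
- $m$ is the batch size (three in the graph above);
- $\hat{x}_i$ are the normalized activations;
- $y_i = \gamma\,\hat{x}_i + \beta$ are the outputs.

Each output depends on $\gamma$, $\beta$ and $\hat{x}_i$ directly, so one application of the chain rule suffices:

$$
\frac{\partial \ell}{\partial \beta} = \sum_{i=1}^{m} \frac{\partial \ell}{\partial y_i}, \qquad
\frac{\partial \ell}{\partial \gamma} = \sum_{i=1}^{m} \frac{\partial \ell}{\partial y_i}\,\hat{x}_i, \qquad
\frac{\partial \ell}{\partial \hat{x}_i} = \frac{\partial \ell}{\partial y_i}\,\gamma
$$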

The next three are a bit tricky. To check the derivation, just follow the edges of the computation graph.
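Written out as in the paper, with $\mu_B$ and $\sigma_B^2$ the batch mean and variance, these three are:

$$
\frac{\partial \ell}{\partial \sigma_B^2} = \sum_{i=1}^{m} \frac{\partial \ell}{\partial \hat{x}_i}\,(x_i - \mu_B)\left(-\frac{1}{2}\right)(\sigma_B^2 + \epsilon)^{-3/2}
$$

$$
\frac{\partial \ell}{\partial \mu_B} = \left(\sum_{i=1}^{m} \frac{\partial \ell}{\partial \hat{x}_i}\cdot\frac{-1}{\sqrt{\sigma_B^2 + \epsilon}}\right) + \frac{\partial \ell}{\partial \sigma_B^2}\cdot\frac{\sum_{i=1}^{m} -2(x_i - \mu_B)}{m}
$$

$$
\frac{\partial \ell}{\partial x_i} = \frac{\partial \ell}{\partial \hat{x}_i}\cdot\frac{1}{\sqrt{\sigma_B^2 + \epsilon}} + \frac{\partial \ell}{\partial \sigma_B^2}\cdot\frac{2(x_i - \mu_B)}{m} + \frac{\partial \ell}{\partial \mu_B}\cdot\frac{1}{m}
$$

The second term of $\partial \ell / \partial \mu_B$ is always zero, because $\sum_i (x_i - \mu_B) = 0$. It only appears because $\sigma_B^2$ depends on $\mu_B$ in the graph.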

These match the gradients given in the paper. With a few modifications, the derivatives can be vectorized:
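One common compact form substitutes $\partial \ell/\partial \mu_B$ and $\partial \ell/\partial \sigma_B^2$ into $\partial \ell/\partial x_i$. This leaves a single expression with two batch sums:

$$\frac{\partial \ell}{\partial x_i} = \frac{1}{m\sqrt{\sigma_B^2+\epsilon}}\left(m\,\frac{\partial \ell}{\partial \hat{x}_i} - \sum_{j}\frac{\partial \ell}{\partial \hat{x}_j} - \hat{x}_i \sum_{j}\frac{\partial \ell}{\partial \hat{x}_j}\,\hat{x}_j\right)$$

This is not necessarily the exact form the author had in mind. Below is a minimal pure-Python sketch of it for a single feature. The helper names and the linear test loss are hypothetical. A central finite-difference check confirms the analytic gradients.

```python
import math

def batchnorm_forward(xs, gamma, beta, eps=1e-5):
    """Forward pass for one feature; caches x_hat and 1/sqrt(var + eps) for backprop."""
    m = len(xs)
    mu = sum(xs) / m
    var = sum((x - mu) ** 2 for x in xs) / m
    inv_std = 1.0 / math.sqrt(var + eps)
    x_hat = [(x - mu) * inv_std for x in xs]
    return [gamma * xh + beta for xh in x_hat], (x_hat, inv_std)

def batchnorm_backward(dys, gamma, cache):
    """Given upstream grads dys[i] = dl/dy_i, return (dl/dx, dl/dgamma, dl/dbeta)."""
    x_hat, inv_std = cache
    m = len(dys)
    dbeta = sum(dys)
    dgamma = sum(dy * xh for dy, xh in zip(dys, x_hat))
    dx_hat = [dy * gamma for dy in dys]
    sum_dxh = sum(dx_hat)
    sum_dxh_xh = sum(d * xh for d, xh in zip(dx_hat, x_hat))
    # dl/dmu and dl/dsigma^2 substituted into dl/dx_i: only two batch sums remain.
    dxs = [inv_std / m * (m * d - sum_dxh - xh * sum_dxh_xh)
           for d, xh in zip(dx_hat, x_hat)]
    return dxs, dgamma, dbeta

# Hypothetical test loss l = sum_i w_i * y_i, so that dl/dy_i = w_i.
xs, gamma, beta = [1.0, 2.0, 6.0], 2.0, 0.5
weights = [0.3, -1.2, 0.7]

def loss(xs, gamma):
    ys, _ = batchnorm_forward(xs, gamma, beta)
    return sum(w * y for w, y in zip(weights, ys))

_, cache = batchnorm_forward(xs, gamma, beta)
dxs, dgamma, dbeta = batchnorm_backward(weights, gamma, cache)

# Central finite differences as an independent check of the analytic gradients.
h = 1e-6
numeric_dxs = []
for i in range(len(xs)):
    plus, minus = list(xs), list(xs)
    plus[i] += h
    minus[i] -= h
    numeric_dxs.append((loss(plus, gamma) - loss(minus, gamma)) / (2 * h))
numeric_dgamma = (loss(xs, gamma + h) - loss(xs, gamma - h)) / (2 * h)

print(dxs)
print(numeric_dxs)
```

A side effect visible in this form is that the input gradients always sum to zero across the batch, because the batch mean is subtracted in the forward pass.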

Deeper Intuition

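This very nice paper by Kohler et al. shows that Batch Normalization (and friends) may be thought of as a reparameterization of the weight space. In matrix notation, for a single unit with weight vector $\mathbf{w}$ and input $\mathbf{x}$, the normalization operation amounts to computing the following:

$$\mathrm{BN}(\mathbf{x}^\top \mathbf{w}) = \gamma\,\frac{\mathbf{x}^\top \mathbf{w} - \mathbb{E}[\mathbf{x}]^\top \mathbf{w}}{\sqrt{\operatorname{Var}[\mathbf{x}^\top \mathbf{w}]}} + \beta$$

Assuming $\mathbb{E}[\mathbf{x}] = 0$ (and dropping $\beta$), this simplifies to

$$\mathrm{BN}(\mathbf{x}^\top \mathbf{w}) = \gamma\,\frac{\mathbf{x}^\top \mathbf{w}}{\sqrt{\mathbf{w}^\top \mathbf{S}\,\mathbf{w}}}$$

where $\mathbf{S} = \mathbb{E}[\mathbf{x}\mathbf{x}^\top]$ is the covariance matrix of $\mathbf{x}$.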
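Under this view, batch norm's output for a single unit depends on the direction of the weight vector $\mathbf{w}$ but not on its length. Scaling $\mathbf{w}$ by a positive constant $c$ scales both the centered pre-activation and its standard deviation by $c$, so the two cancel. Below is a quick numerical check under these assumptions:

- random Gaussian inputs;
- batch statistics in place of expectations;
- $\beta = 0$;
- $\epsilon = 0$, to match the idealized formula.

The function name `bn_unit` is hypothetical.

```python
import math
import random

def bn_unit(inputs, w, gamma=1.0, eps=0.0):
    """Normalized pre-activation x^T w of a single unit across a batch of input vectors.

    eps defaults to 0 to match the idealized formula; beta is omitted (taken as 0).
    """
    z = [sum(xi * wi for xi, wi in zip(x, w)) for x in inputs]
    m = len(z)
    mu = sum(z) / m
    var = sum((zi - mu) ** 2 for zi in z) / m
    return [gamma * (zi - mu) / math.sqrt(var + eps) for zi in z]

random.seed(0)
inputs = [[random.gauss(0.0, 1.0) for _ in range(4)] for _ in range(8)]  # 8 examples, 4 features
w = [0.5, -1.0, 2.0, 0.3]
scaled_w = [10.0 * wi for wi in w]  # same direction, ten times the length

diff = max(abs(a - b) for a, b in zip(bn_unit(inputs, w), bn_unit(inputs, scaled_w)))
print(diff)  # floating-point noise only
```

With $\epsilon > 0$ the invariance is only approximate. It still holds closely whenever the pre-activation variance is large compared with $\epsilon$.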

Does it work?

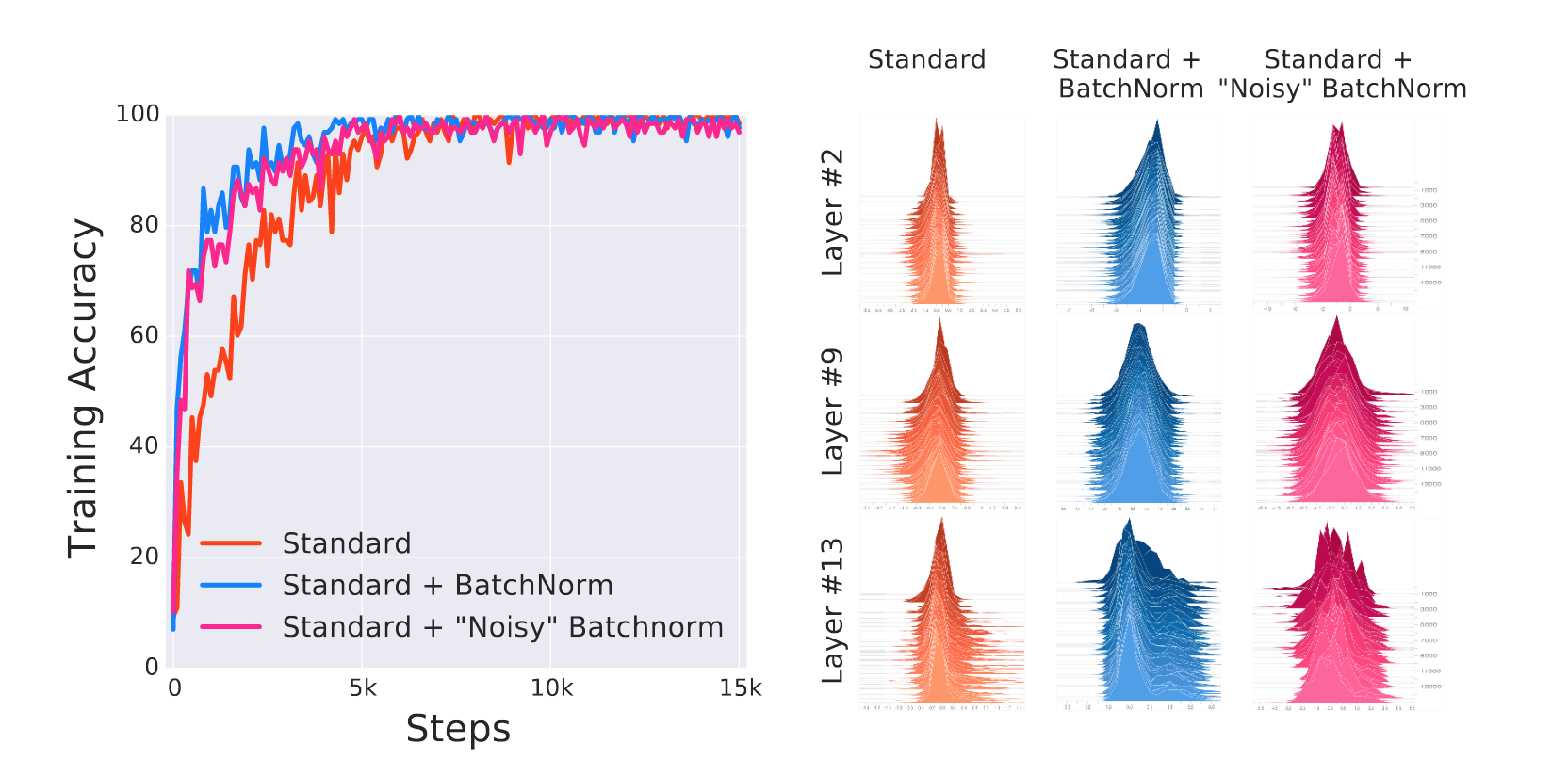

According to newer work, yes, but not quite for the reason originally given. Santurkar et al. show that Internal Covariate Shift is not as detrimental to learning as previously thought. They deliberately inject noise after BatchNorm layers to induce covariate shift, and the resulting network does not do as poorly as expected. In fact, it still outperforms the standard (unnormalized) network.

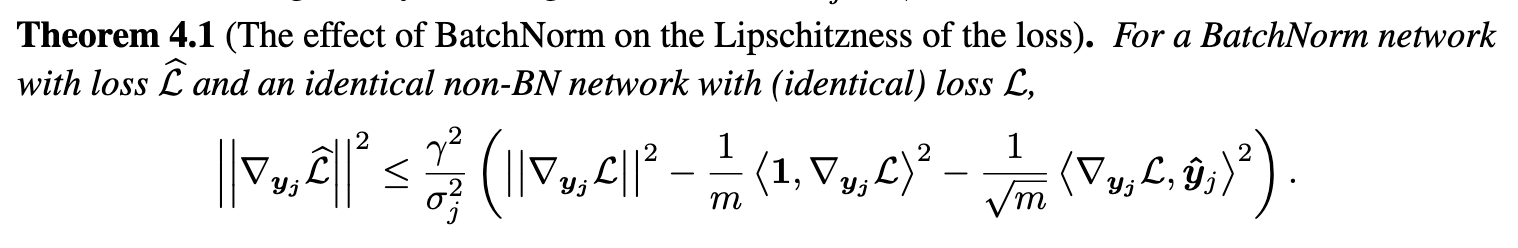

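Their explanation is that batch norm assists the optimizer by making the loss landscape smoother. Formally, they show that the magnitude of the gradient of the loss with respect to a layer's activations is bounded by a rescaled, reduced version of the corresponding gradient in the unnormalized network. In other words, the loss becomes more Lipschitz.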
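One ingredient of this result can be checked directly from the batch norm backward pass. This is a sketch of the mechanism, not a reproduction of Santurkar et al.'s proof.

The gradient with respect to the pre-normalization activations is $\gamma/\sqrt{\sigma_B^2+\epsilon}$ times the upstream gradient, after two components are removed:

- the component along the all-ones vector, removed entirely;
- the component along the normalized activations, largely removed.

Removing components can only shrink a vector. So the resulting norm is at most $|\gamma|/\sqrt{\sigma_B^2+\epsilon}$ times the upstream norm. The helper names, batch size and random data below are all hypothetical.

```python
import math
import random

def bn_input_grad(xs, dys, gamma, eps=1e-5):
    """Return dl/dx_i for one batch-normalized feature and 1/sqrt(var + eps)."""
    m = len(xs)
    mu = sum(xs) / m
    var = sum((x - mu) ** 2 for x in xs) / m
    inv_std = 1.0 / math.sqrt(var + eps)
    x_hat = [(x - mu) * inv_std for x in xs]
    dx_hat = [gamma * dy for dy in dys]
    s1 = sum(dx_hat)
    s2 = sum(d * xh for d, xh in zip(dx_hat, x_hat))
    dxs = [inv_std / m * (m * d - s1 - xh * s2) for d, xh in zip(dx_hat, x_hat)]
    return dxs, inv_std

def norm(v):
    """Euclidean norm of a list of floats."""
    return math.sqrt(sum(a * a for a in v))

random.seed(1)
gamma = 1.5
worst_ratio = 0.0
for _ in range(1000):
    xs = [random.gauss(0.0, 3.0) for _ in range(16)]   # activations of one feature
    dys = [random.gauss(0.0, 1.0) for _ in range(16)]  # arbitrary upstream gradients
    dxs, inv_std = bn_input_grad(xs, dys, gamma)
    # Ratio of ||dl/dx|| to the bound (|gamma| / sigma) * ||dl/dy||; never above 1.
    worst_ratio = max(worst_ratio, norm(dxs) / (abs(gamma) * inv_std * norm(dys)))
print(worst_ratio)
```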

Batch norm also imposes some second-order constraints on the Hessian. Intuitively, these mean that a step in the direction of the gradient is more likely to lead toward a minimum in a batch-normalized network than in an unnormalized one.
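The second-order term in question appears in the Taylor expansion of the loss along a gradient step of size $\eta$. This is a standard expansion; the notation is ours, not the paper's, with $\mathbf{g}$ for the gradient and $\mathbf{H}$ for the Hessian:

$$\mathcal{L}(\boldsymbol{\theta} - \eta\,\mathbf{g}) \approx \mathcal{L}(\boldsymbol{\theta}) - \eta\,\lVert\mathbf{g}\rVert^2 + \frac{\eta^2}{2}\,\mathbf{g}^\top \mathbf{H}\,\mathbf{g}$$

Suppose the curvature term $\mathbf{g}^\top \mathbf{H}\,\mathbf{g}$ is small relative to $\lVert\mathbf{g}\rVert^2$. Then the first-order prediction of the loss decrease stays accurate over a wider range of step sizes. Santurkar et al.'s second-order result bounds a quadratic form of this kind for the batch-normalized network.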