Motivation

Most linear regression models assume that the noise variance is constant across all inputs (homoscedasticity).

The standard closed-form solution, obtained by maximum likelihood estimation (MLE), gives

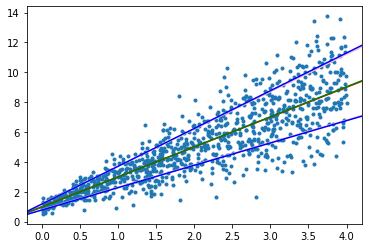

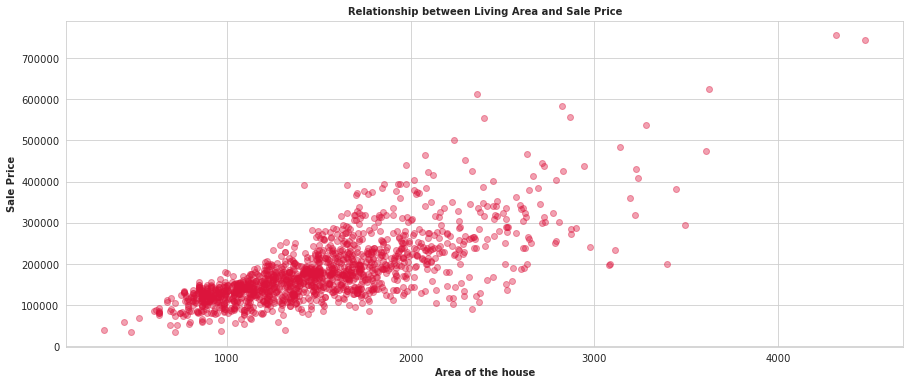

However, the variance need not always be constant. Consider a model where the variance varies linearly with the input (or rather, simply increases with it).

While there are feature transformations that would make the data less heteroscedastic, this article focuses on learning the variance parameters, so that along with our regression estimate we can provide a variance estimate as well.

The Model

We assume that the standard deviation (not the variance) is a linear function of the input.

The log-likelihood function is hence
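As a sketch under assumed notation (the symbols here are illustrative and not necessarily the article's own): for data $(x_i, y_i)$, $i = 1, \dots, n$, where each $x_i$ includes a constant 1 for the intercept, suppose the mean is $w^\top x_i$ and the standard deviation is $\sigma_i = v^\top x_i > 0$. The Gaussian log-likelihood would then read:

$$
\ell(w, v) = -\frac{n}{2}\log(2\pi) \;-\; \sum_{i=1}^{n} \log\left(v^\top x_i\right) \;-\; \sum_{i=1}^{n} \frac{\left(y_i - w^\top x_i\right)^2}{2\left(v^\top x_i\right)^2}
$$

The middle term is what separates this from ordinary least squares: it penalizes large predicted standard deviations, so the fit cannot shrink the last term simply by inflating $v$.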

Differentiating with respect to the regression weights and setting the result to zero gives the first-order condition.

Rearranging this gives us, in matrix form,

Where
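One way to write this step, assuming a mean of $w^\top x_i$ and a standard deviation of $v^\top x_i$ (illustrative symbols, not necessarily the article's), with $X$ the matrix whose rows are $x_i^\top$ and $y$ the vector of targets:

$$
\frac{\partial \ell}{\partial w} = \sum_{i=1}^{n} \frac{\left(y_i - w^\top x_i\right) x_i}{\left(v^\top x_i\right)^2} = 0
\quad\Longrightarrow\quad
X^\top \Lambda X\, w = X^\top \Lambda\, y
\quad\Longrightarrow\quad
w = \left(X^\top \Lambda X\right)^{-1} X^\top \Lambda\, y
$$

$$
\Lambda = \mathrm{diag}\left(\frac{1}{\left(v^\top x_1\right)^2}, \dots, \frac{1}{\left(v^\top x_n\right)^2}\right)
$$

Under these assumptions this is the weighted least squares estimate: for fixed $v$, the weights $w$ have a closed form, and points with a larger predicted standard deviation contribute less to the fit.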

The derivative with respect to the standard deviation parameters is obtained in the same way.
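As a sketch, again assuming a Gaussian model with mean $w^\top x_i$ and standard deviation $v^\top x_i$ (the symbols $w$ and $v$ are illustrative), differentiating the log-likelihood with respect to $v$ gives:

$$
\frac{\partial \ell}{\partial v} = \sum_{i=1}^{n} \left[ \frac{\left(y_i - w^\top x_i\right)^2}{\left(v^\top x_i\right)^3} - \frac{1}{v^\top x_i} \right] x_i
$$

The first term comes from the squared-residual part of the log-likelihood and the second from the $-\log\left(v^\top x_i\right)$ part. Setting this expression to zero does not appear to yield a simple closed form for $v$, which makes an iterative, gradient-based update a natural choice.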

An Implementation

The implementation was fairly straightforward: gradient descent converged nicely on some generated data.
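The following is a minimal sketch of one way such a fit could be arranged, not necessarily the article's own code. It assumes a mean of `X @ w` and a standard deviation of `X @ v`, with a column of ones as the first column of `X`. It alternates a closed-form weighted least squares solve for `w` with a gradient step on `v` (gradient ascent on the log-likelihood, which is the same as gradient descent on its negative). The function name `fit_heteroscedastic`, the learning rate, the iteration count, and the synthetic data parameters are all hypothetical choices made for illustration.

```python
import numpy as np

def fit_heteroscedastic(X, y, lr=0.02, n_iter=5000):
    """Fit y ~ N(X @ w, (X @ v)**2) by maximizing the log-likelihood.

    Assumes column 0 of X is all ones, so that a constant standard
    deviation is a valid starting point for v.
    """
    n, d = X.shape
    # Start from ordinary least squares and a constant standard deviation.
    w = np.linalg.lstsq(X, y, rcond=None)[0]
    v = np.zeros(d)
    v[0] = np.std(y - X @ w)
    for _ in range(n_iter):
        sigma = X @ v            # predicted standard deviations
        lam = 1.0 / sigma**2     # diagonal of the weight matrix
        # Closed-form weighted least squares update for w given v.
        w = np.linalg.solve(X.T @ (lam[:, None] * X), X.T @ (lam * y))
        r = y - X @ w
        # Gradient of the mean log-likelihood with respect to v.
        grad_v = X.T @ (r**2 / sigma**3 - 1.0 / sigma) / n
        # Halve the step until every predicted standard deviation stays positive.
        step = lr
        while np.any(X @ (v + step * grad_v) <= 0):
            step /= 2
        v = v + step * grad_v
    return w, v

# Synthetic data whose noise standard deviation grows linearly with x.
rng = np.random.default_rng(0)
n = 2000
x = rng.uniform(0.0, 10.0, size=n)
X = np.column_stack([np.ones(n), x])
true_w = np.array([2.0, 3.0])
true_v = np.array([0.5, 0.3])
y = X @ true_w + (X @ true_v) * rng.standard_normal(n)

w_hat, v_hat = fit_heteroscedastic(X, y)
print("w:", w_hat, "v:", v_hat)
```

Solving for `w` in closed form at each iteration leaves only `v` to be learned by gradient steps, which keeps the step size easy to tune. The step-halving guard is a simple safeguard rather than a full constrained optimizer, and a different parameterization of the standard deviation may be preferable on data where it approaches zero.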