| Task | #11 |

|---|---|

| Position | 8 |

| Score | 24,225 |

| Best Score | 21,477 |

| Points | 0.887 |

Problem Statement

You have a data stream of uint32 numbers in binary representation sending to STDIN. The byte order is little endian.

Try to find the sum of the top 100 greatest numbers for the shortest time.

Numbers quantity is 100 000 000.

Input Generator

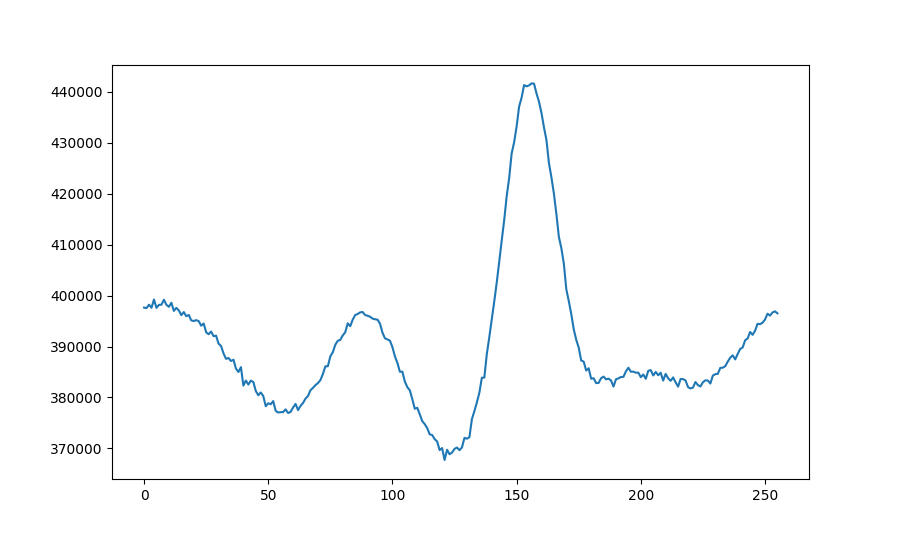

The input generator is (to nobody’s surprise) NOT normal!

Looks like a mixture of normals. I’m not sure how it’s distributed in time though. Maybe you can get away with not using a heap by doing some form of distribution estimation? I don’t think it’ll be effective enough to filter out a huge chunk of numbers though, and the variance is just enough to trip you over if you’re naively filtering with a min bound of UINT_MAX * 1e-6 - DELTA.

Solution Sketch

Use a min-heap with a capacity of 100. if the next number is greater than the minimum value of the heap, remove the min and insert this new number. It’s easy to vectorize this comparision, and since you’re discarding 99.9% of the things, it devolves into yet another memory bandwidth optimization problem.

Thoughts

I haven’t given the data enough time or thought, the first time I actually examined the distribution is now, when I was writing this summary up. Maybe it’s worth a revisit given I haven’t explored the time evolution of the data stream?